Nvidia will remain the gold standard for AI training chips, CEO Jensen Huang told investors, even as rivals seek to chip away at its market share and one of Nvidia’s key suppliers gave a moderate forecast for AI chip sales.

Everyone from OpenAI to Elon Musk relies on Nvidia semiconductors to run their large language or computer vision models. The deployment of Nvidia’s “Blackwell” system later this year will only solidify that lead, Huang said at the company’s annual shareholder meeting on Wednesday.

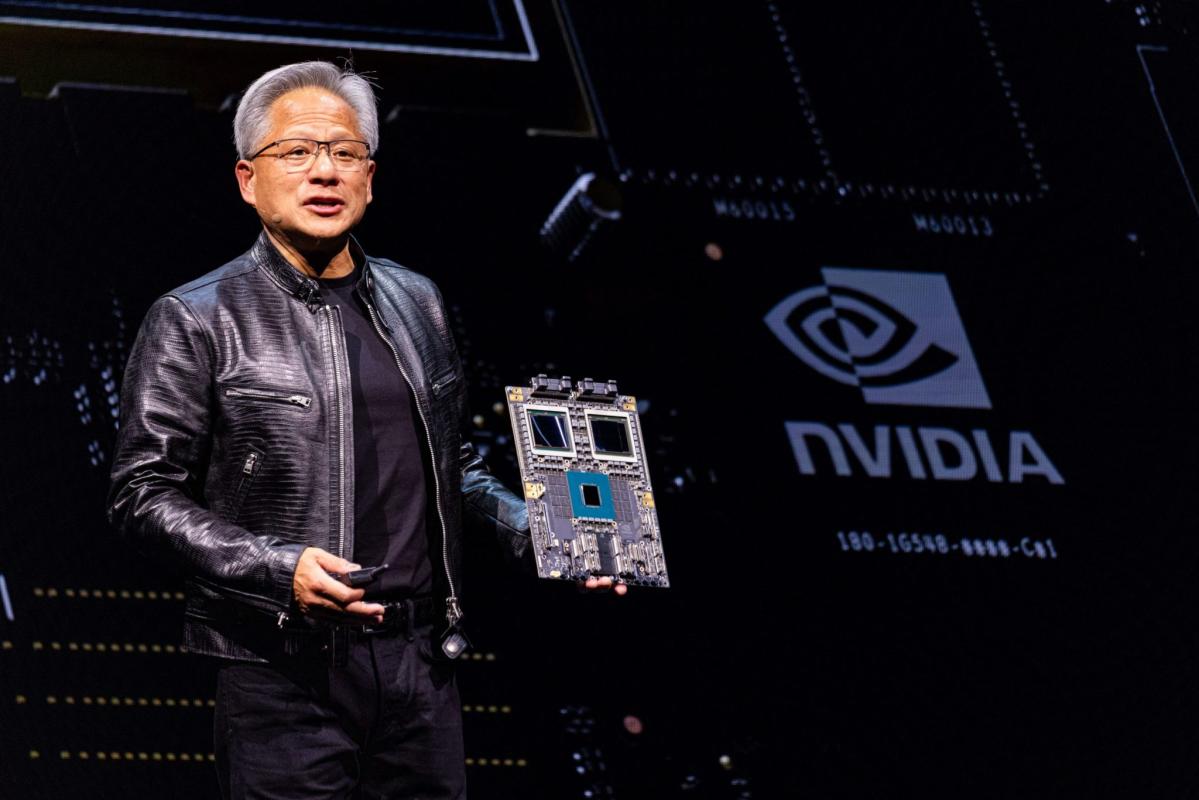

Unveiled in March, Blackwell is the next generation of AI training processors to follow Nvidia’s flagship “Hopper” line of H100 chips, one of the hottest commodities in the tech industry, fetching prices in the tens of thousands of dollars each.

“The Blackwell architecture platform is probably the most successful product in our history and indeed in the entire history of computing,” Huang said.

Nvidia briefly eclipsed Microsoft and Apple this month to become the world’s most valuable company thanks to a remarkable rally that powered much of this year’s gains in the S&P 500 index. At over $3 trillion, Huang’s company was at one point worth more than entire economies and stock markets, only to suffer a record loss of market value as investors locked in their profits.

Yet as long as Nvidia chips remain the benchmark for AI training, there is little reason to believe the longer-term outlook is cloudy, and here the fundamentals continue to appear robust.

One of Nvidia’s key advantages is a sticky AI ecosystem known as CUDA, short for Compute Unified Device Architecture. Just as ordinary consumers are reluctant to switch from their Apple iOS device to a Samsung phone using Google Android, a whole cohort of developers has been working with CUDA for years and feels so comfortable that there is little reason to consider using another software platform. Just like the hardware, CUDA has become a standard in its own right.

“The Nvidia platform is widely available from all major cloud providers and computer manufacturers, creating a broad and attractive base for developers and customers, making our platform more valuable to our customers,” Huang added Wednesday.

Micron’s Forecast for Next Quarter Revenue Not Enough for Bulls

The AI business recently took a hit after memory chip maker Micron Technology, a supplier of high-bandwidth memory (HBM) chips to companies like Nvidia, forecast that fiscal fourth-quarter revenue would only match market expectations of about $7.6 billion.

Micron shares plunged 7%, significantly underperforming a slight gain in the broader tech-heavy Nasdaq Composite.

In the past, Micron and its Korean rivals Samsung and SK Hynix have experienced the boom-and-bust cycles common to the memory chip market, long considered a commodity sector compared to logic chips such as graphics processors.

But enthusiasm has grown because of demand for the memory chips needed to train artificial intelligence. Micron’s stock price has more than doubled in the past 12 months, meaning investors have already priced in much of the growth management is predicting.

“The forecasts were basically in line with expectations and in the AI hardware world, if you follow those lines, it’s considered a slight disappointment,” said Gene Munster, a technology investor at Deepwater Asset Management. “Momentum investors just didn’t see that additional reason to be more positive about the story.”

Analysts are closely tracking high-bandwidth memory demand as a leading indicator for the AI sector because it is crucial to solving the biggest economic constraint facing AI training today: the question of scaling.

HBM chips solve scaling problem in AI training

Costs do not rise in line with the complexity of a model (the number of parameters it has, which can reach into the billions), but rather grow exponentially. This results in diminishing efficiency returns over time.

Even if revenues grow at a steady pace, losses are likely to run into the billions or even tens of billions per year as models become more advanced. This threatens to overwhelm any company that doesn’t have a deep-pocketed investor like Microsoft, which can ensure that OpenAI can still “pay the bills,” as CEO Sam Altman recently put it.

One of the main reasons for diminishing returns is the growing gap between the two factors that dictate AI training performance. The first is the raw computing power of a logic chip, measured in FLOPS, or floating-point operations per second, and the second is the memory bandwidth required to deliver data to it quickly, often expressed in millions of transfers per second, or MT/s.

Because the two work in tandem, scaling one without the other simply leads to waste and cost inefficiency. This is why FLOPS utilization, or the share of a chip’s peak compute that can actually be used, is a key metric for assessing the cost-effectiveness of AI models.
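To make the interplay concrete, here is a rough sketch of how utilization responds when only one of the two factors is scaled. It uses the simplified "roofline" rule of thumb, which is an assumption brought in for illustration and not something Micron or Nvidia describe in this story. Every number is hypothetical, and bandwidth is expressed in bytes per second rather than MT/s to keep the arithmetic simple.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float,
                     flops_per_byte: float) -> float:
    """Roofline-style bound: a chip cannot compute faster than its peak,
    nor faster than memory can feed it with data."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)


def flops_utilization(peak_flops: float, bandwidth_bytes_per_s: float,
                      flops_per_byte: float) -> float:
    """Share of peak compute that can actually be used (0.0 to 1.0)."""
    usable = attainable_flops(peak_flops, bandwidth_bytes_per_s, flops_per_byte)
    return usable / peak_flops


# Hypothetical figures, chosen only to illustrate the effect.
PEAK_FLOPS = 1_000e12   # 1,000 teraFLOPS of raw compute
BANDWIDTH = 2e12        # 2 terabytes per second of memory bandwidth
FLOPS_PER_BYTE = 100    # calculations the workload performs per byte fetched

baseline = flops_utilization(PEAK_FLOPS, BANDWIDTH, FLOPS_PER_BYTE)
double_compute = flops_utilization(2 * PEAK_FLOPS, BANDWIDTH, FLOPS_PER_BYTE)
double_bandwidth = flops_utilization(PEAK_FLOPS, 2 * BANDWIDTH, FLOPS_PER_BYTE)

print(f"baseline utilization:        {baseline:.0%}")
print(f"after doubling compute only: {double_compute:.0%}")
print(f"after doubling bandwidth:    {double_bandwidth:.0%}")
```

Under these assumed figures, doubling compute alone halves utilization because the extra capacity sits idle waiting for data, while doubling memory bandwidth doubles it.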

Sold out until the end of next year

As Micron points out, data transfer rates have not been able to keep pace with the increase in computing power. The resulting bottleneck, often referred to as the “memory wall,” is one of the main causes of today’s inherent inefficiency when scaling AI training models.

This explains why the US government has focused heavily on memory bandwidth when deciding which specific Nvidia chips should be banned from export to China in order to weaken Beijing’s AI development agenda.

On Wednesday, Micron said its HBM business was “sold out” through the end of the next calendar year, which trails its fiscal year by one quarter, echoing similar comments from Korean competitor SK Hynix.

“We expect to generate several hundred million dollars of revenue from HBM in FY24 and several (billions of dollars) of revenue from HBM in FY25,” Micron said Wednesday.

This story was originally featured on Fortune.com